AZ-305J - Designing Microsoft Azure Infrastructure Solutions (Japanese edition of AZ-305), Microsoft.AZ-305J.v2024-09-04.q148, Question 105


Your on-premises network contains a file server named Server1 that stores 500 GB of data.

You need to use Azure Data Factory to copy the data from Server1 to Azure Storage.

You add a new data factory.

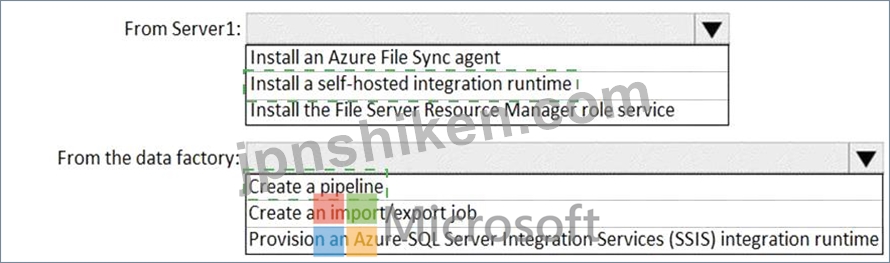

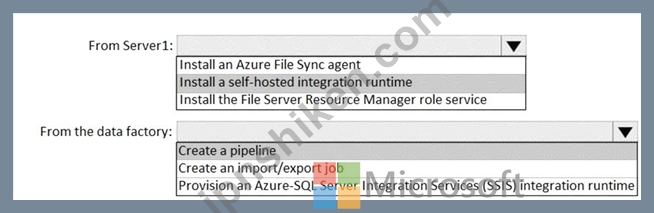

What should you do next? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.


Correct answer:

Explanation:

Box 1: Install a self-hosted integration runtime

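A self-hosted integration runtime is customer-managed infrastructure that Azure Data Factory uses to provide data integration capabilities across different network environments, including private networks that Azure cannot reach directly. Because Server1 is on the on-premises network, you install the self-hosted integration runtime on a computer that can access Server1 so that the data factory can read its data.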
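As a rough illustration of how the self-hosted integration runtime is wired in, the Python sketch below builds two Data Factory JSON definitions: a runtime of type `SelfHosted` and a `FileServer` linked service whose `connectVia` reference points at that runtime. The names `SelfHostedIR1` and `Server1FileShare`, the share path `\\Server1\data`, and the account `CONTOSO\adfreader` are hypothetical, and the field names reflect our understanding of the ADF authoring JSON format rather than a verified export, so check them against the File System connector documentation before use. Installing the runtime software on the on-premises node and registering it with an authentication key is not shown.

```python
import json


def self_hosted_ir(name: str) -> dict:
    """Return a minimal self-hosted integration runtime definition (name is hypothetical)."""
    return {
        "name": name,
        "properties": {
            "type": "SelfHosted",
            "description": "Runs on-premises on a machine that can reach Server1",
        },
    }


def file_server_linked_service(name: str, host: str, user_id: str, ir_name: str) -> dict:
    """Return a FileServer linked service that reaches the share through the self-hosted IR."""
    return {
        "name": name,
        "properties": {
            "type": "FileServer",
            "typeProperties": {
                "host": host,
                "userId": user_id,
                # Placeholder only: a real deployment would reference a secret store instead.
                "password": {"type": "SecureString", "value": "<placeholder>"},
            },
            # connectVia routes this connection through the on-premises runtime.
            "connectVia": {"referenceName": ir_name, "type": "IntegrationRuntimeReference"},
        },
    }


if __name__ == "__main__":
    ir = self_hosted_ir("SelfHostedIR1")
    ls = file_server_linked_service(
        "Server1FileShare", r"\\Server1\data", r"CONTOSO\adfreader", ir["name"]
    )
    print(json.dumps([ir, ls], indent=2))
```

The `connectVia` reference is the piece that ties the linked service to the on-premises runtime; without it, Data Factory would default to an Azure-hosted runtime, which cannot see Server1 on the private network.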

Box 2: Create a pipeline

With ADF, existing data processing services can be composed into data pipelines that are highly available and managed in the cloud. These data pipelines can be scheduled to ingest, prepare, transform, analyze, and publish data, and ADF manages and orchestrates the complex data and processing dependencies. In this scenario, the pipeline contains a Copy activity that reads from Server1 through the self-hosted integration runtime and writes to Azure Storage.

References:

https://docs.microsoft.com/en-us/azure/machine-learning/team-data-science-process/move-sql-azure-adf

https://docs.microsoft.com/pl-pl/azure/data-factory/tutorial-hybrid-copy-data-tool

https://docs.microsoft.com/en-us/azure/data-factory/create-self-hosted-integration-runtime?tabs=data-factory

"A self-hosted integration runtime can run copy activities between a cloud data store and a data store in a private network"

https://docs.microsoft.com/en-us/azure/data-factory/introduction

"With Data Factory, you can use the Copy Activity in a data pipeline to move data from both on-premises and cloud source data stores to a centralization data store in the cloud for further analysis"
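The Copy Activity in a pipeline, as quoted above, can be sketched as a JSON definition built in Python. The block below creates a pipeline with a single `Copy` activity that reads files from an on-premises file-share dataset and writes them to a Blob storage dataset. It assumes a binary (as-is) file copy and hypothetical dataset names `Server1Dataset` and `AzureBlobDataset`, where the source dataset is assumed to use a linked service that connects through the self-hosted integration runtime. The `BinarySource`/`BinarySink` and store-settings type names follow the ADF JSON format as we understand it and should be checked against the Copy activity documentation.

```python
import json


def copy_pipeline(name: str, source_dataset: str, sink_dataset: str) -> dict:
    """Return a pipeline with one Copy activity (dataset names are hypothetical)."""
    copy_activity = {
        "name": "CopyServer1ToBlob",
        "type": "Copy",
        "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            # Binary copy moves the files as-is without parsing their contents.
            "source": {
                "type": "BinarySource",
                "storeSettings": {"type": "FileServerReadSettings", "recursive": True},
            },
            "sink": {
                "type": "BinarySink",
                "storeSettings": {"type": "AzureBlobStorageWriteSettings"},
            },
        },
    }
    return {"name": name, "properties": {"activities": [copy_activity]}}


if __name__ == "__main__":
    pipeline = copy_pipeline("CopyServer1Pipeline", "Server1Dataset", "AzureBlobDataset")
    print(json.dumps(pipeline, indent=2))
```

Once published, such a pipeline would typically be run on demand or attached to a trigger; the self-hosted runtime then performs the actual read from Server1.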
