- AI-102J - Designing and Implementing a Microsoft Azure AI Solution (AI-102 Japanese version)

- Microsoft.AI-102J.v2025-06-23.q147

- Question 129

You have an app that manages feedback.

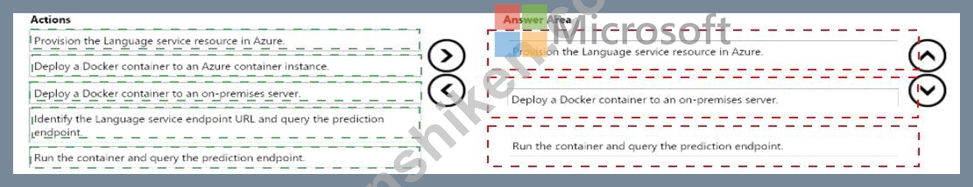

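You need to ensure that the app can detect negative comments by using the sentiment analysis API in Azure Cognitive Service for Language. The solution must ensure that the managed feedback remains on your company's internal network.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

NOTE: More than one order of answer choices is correct. You will receive credit for any of the correct orders you select.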

Correct answer:

Explanation:

Provision the Language service resource in Azure.

Deploy a Docker container to an on-premises server.

Run the container and query the prediction endpoint.

According to the Microsoft documentation, the Language service is a cloud-based service that provides natural language processing features such as sentiment analysis, key phrase extraction, and named entity recognition. You can provision the Language service resource in Azure by following the steps in Create a Language resource. You will need to provide a name, a subscription, a resource group, a region, and a pricing tier for your resource. The resource also gives you a key and an endpoint, which you use to authenticate your requests to the Language service API.

According to the Microsoft documentation, you can also run the Language service as a container on your own premises or in another cloud. This option gives you more control over your data and network, because the text you analyze is processed locally and stays on your internal network. Note that a standard container still needs to reach Azure to report billing usage; only containers provisioned for disconnected use run without an internet connection. You can deploy a Docker container to an on-premises server by following the steps in Deploy Language containers. You will need to have Docker installed on your server, pull the container image from the Microsoft Container Registry, and run the container with the appropriate parameters. You will also need to activate your container with the key and endpoint from your Azure resource.
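The deployment step above can be sketched as a small Python helper that assembles the `docker run` arguments for the sentiment container. This is a minimal sketch, not the documented procedure: the helper name `build_docker_run_args` is hypothetical, the memory and CPU values are illustrative, and the image tag is left as a parameter because the right tag depends on the language and version you need. The `Eula`, `Billing`, and `ApiKey` settings are the ones the container uses to accept the license and activate against your Azure resource.

```python
from typing import List

# Sentiment container image on the Microsoft Container Registry.
# The tag is deliberately not hard-coded; check the registry for valid tags.
SENTIMENT_IMAGE = "mcr.microsoft.com/azure-cognitive-services/textanalytics/sentiment"


def build_docker_run_args(billing_endpoint: str, api_key: str,
                          image_tag: str, host_port: int = 5000) -> List[str]:
    """Return the argument list for `docker run` (hypothetical helper).

    billing_endpoint and api_key come from the Language resource in Azure.
    The memory/CPU limits below are illustrative assumptions.
    """
    return [
        "docker", "run", "--rm",
        "-p", f"{host_port}:5000",       # container listens on port 5000
        "--memory", "8g", "--cpus", "1",
        f"{SENTIMENT_IMAGE}:{image_tag}",
        "Eula=accept",                   # accept the license terms
        f"Billing={billing_endpoint}",   # endpoint of the Azure resource
        f"ApiKey={api_key}",             # key of the Azure resource
    ]


if __name__ == "__main__":
    # Placeholders only; substitute your own endpoint, key, and tag.
    args = build_docker_run_args("<your-endpoint>", "<your-key>", "<image-tag>")
    print(" ".join(args))
```

Passing the returned list to `subprocess.run` on the on-premises server would start the container; building the list separately keeps the key out of shell history and makes the command easy to inspect first.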

According to the Microsoft documentation, once you have deployed and activated your container, you can run it and query the prediction endpoint to get sentiment analysis results. The prediction endpoint is a local URL that follows this format: http://<container IP address>:<port>/text/analytics/v3.1-preview.4/sentiment. You can send HTTP POST requests to this endpoint with your text input in JSON format, and receive JSON responses with sentiment labels and scores for each document and sentence in your input.
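As a rough illustration of querying the prediction endpoint, the sketch below builds the request URL and JSON body, posts it to the container, and picks out the documents labeled negative. It assumes the path quoted above (the version segment may differ for your container image) and the usual `documents` request and response layout of the sentiment API; the function names are hypothetical.

```python
import json
import urllib.request
from typing import Dict, List

# Path as quoted in the explanation; adjust the version to match your image.
SENTIMENT_PATH = "/text/analytics/v3.1-preview.4/sentiment"


def build_url(host: str, port: int) -> str:
    """Local prediction endpoint exposed by the running container."""
    return f"http://{host}:{port}{SENTIMENT_PATH}"


def build_payload(comments: List[str], language: str = "en") -> Dict:
    """Wrap each comment as a document with a 1-based string id."""
    return {"documents": [{"id": str(i), "language": language, "text": text}
                          for i, text in enumerate(comments, start=1)]}


def negative_ids(response: Dict) -> List[str]:
    """Ids of documents whose overall sentiment label is 'negative'."""
    return [doc["id"] for doc in response.get("documents", [])
            if doc.get("sentiment") == "negative"]


def analyze(host: str, port: int, comments: List[str]) -> Dict:
    """POST the comments to the container; requires a running container."""
    request = urllib.request.Request(
        build_url(host, port),
        data=json.dumps(build_payload(comments)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as reply:
        return json.load(reply)
```

With the container listening on the local machine, `negative_ids(analyze("localhost", 5000, comments))` would return the ids of the negative comments. Because the request goes to a local address, the feedback text itself is not sent outside the internal network.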
